Our vision is to develop methodologies for designing intelligent autonomous decision-making systems that are secure and resilient against malicious adversaries and natural failures.

To do so, we look into these systems from a security perspective, under various adversary models. Specifically, we develop techniques to assess the risk (i.e., impact and likelihood) posed by adversaries and failures, and we propose methodologies to design and systematically deploy defense measures that prevent, detect, and mitigate malicious attacks and natural disruptive events. Our research combines methodologies from cybersecurity, control theory, optimization, machine learning, game theory, and networked systems.

Have a look at a popular-science video about our research on developing secure control systems. Some of our recent research themes are also described further down this page.

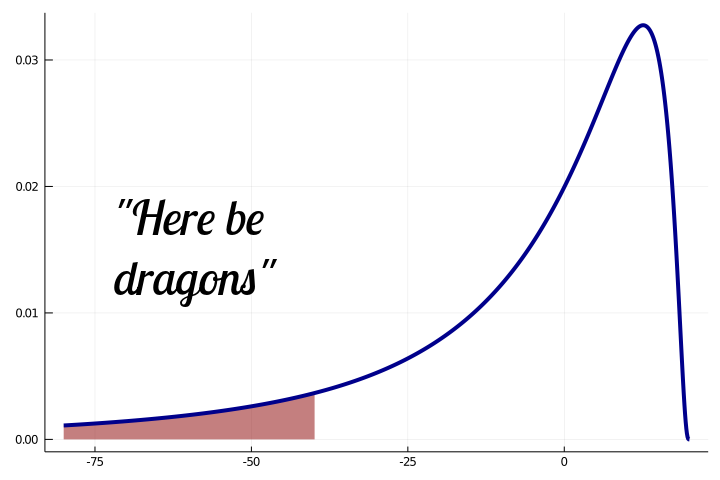

The aim of this theme is to create novel methodologies for addressing cybersecurity problems under uncertainty in learning and control systems. A core element of this research is the development of novel probabilistic risk metrics and optimization-based design methods that jointly consider the impact and the detectability constraints of attacks, as well as model uncertainty and prior beliefs about the adversary model.
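To make "jointly consider impact and detectability" concrete, here is a minimal sketch under a strong simplifying assumption: a static linear model (not the dynamical, uncertain models studied in this theme), where an attack vector `a` shifts a performance output by `G_p a` and a detector residual by `G_r a`. If the detector raises an alarm whenever `||G_r a|| > 1`, the worst-case stealthy impact is the square root of the largest generalized eigenvalue of the pair `(G_p^T G_p, G_r^T G_r)`. The matrices and the function name are hypothetical, and `G_r` is assumed to have full column rank.

```python
import numpy as np
from scipy.linalg import eigh


def worst_case_stealthy_impact(G_p: np.ndarray, G_r: np.ndarray) -> float:
    """Largest ||G_p a|| over attacks a with ||G_r a|| <= 1 (hypothetical static model).

    Assumes G_r has full column rank, so G_r^T G_r is positive definite.
    """
    P = G_p.T @ G_p  # quadratic form measuring attack impact
    R = G_r.T @ G_r  # quadratic form measuring attack detectability
    # eigh(P, R) solves the generalized problem P v = lam R v;
    # eigenvalues are returned in ascending order.
    eigvals = eigh(P, R, eigvals_only=True)
    return float(np.sqrt(eigvals[-1]))


# Direction 1 has the larger raw impact, but direction 2 is far harder
# to detect, so the worst stealthy attack exploits direction 2.
G_p = np.array([[2.0, 0.0], [0.0, 1.0]])
G_r = np.array([[1.0, 0.0], [0.0, 0.1]])
print(worst_case_stealthy_impact(G_p, G_r))
```

The example illustrates why impact alone is a poor security metric: a direction with modest impact but a weak detector footprint can dominate the stealthy worst case.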

Team members: Sribalaji C. Anand, Anh Tung Nguyen, André M. H. Teixeira

@article{Anand_Review2026,

author = {Anand, S. C. and Nguyen, A. T. and Teixeira, A. M. H. and Sandberg, H. and Johansson, K. H.},

journal = {Annual Reviews in Control (Submitted)},

title = {Quantifying Security for Networked Control Systems: A Review},

published = {0},

tag = {10001}

}@article{Nguyen_TAC2026,

author = {Nguyen, A. T. and Anand, S. C. and Teixeira, A. M. H.},

journal = {IEEE Trans. Automatic Control (Accepted)},

title = {Scalable and Optimal Security Allocation in Networks against Stealthy Injection Attacks},

published = {1},

year = {2026},

doi = {10.1109/TAC.2025.3639126},

tag = {10005},

taga = {10001}

}@article{Gallo_Automatica2025,

author = {Gallo, A. J. and Anand, S. C. and Teixeira, A. M. H. and Ferrari, R. M. G.},

journal = {Automatica},

title = {Switching Multiplicative Watermark design against Covert Attacks},

volume = {177},

pages = {112301},

year = {2025},

published = {1},

doi = {10.1016/j.automatica.2025.112301},

tag = {10001}

}@inproceedings{Anand_CDC024,

author = {Anand, S. C. and Grussler, C. and Teixeira, A. M. H.},

title = {Scalable metrics to quantify security of large-scale systems},

year = {2024},

booktitle = {IEEE Conference on Decision and Control},

doi = {10.1109/CDC56724.2024.10886808},

published = {1},

tag = {10001}

}@article{Anand_TAC2024,

author = {Anand, S. C. and Teixeira, A. M. H. and Ahl\'{e}n, A.},

journal = {IEEE Trans. Automatic Control},

number = {5},

pages = {3214--3221},

title = {Risk Assessment of Stealthy Attacks on Uncertain Control Systems},

volume = {69},

month = may,

year = {2024},

doi = {10.1109/TAC.2023.3318194},

published = {1},

tag = {10001}

}@article{Anand_IEEEOJCSys2023,

author = {Anand, S. C. and Teixeira, A. M. H.},

journal = {IEEE Open Journal of Control Systems},

number = {},

pages = {297--309},

title = {Risk-based Security Measure Allocation Against Actuator Attacks},

volume = {2},

year = {2023},

doi = {10.1109/OJCSYS.2023.3305831},

published = {1},

tag = {10001}

}@inproceedings{AnandCCTA2022,

address = {},

author = {Anand, S. C. and Teixeira, A. M. H. and Ahl\'{e}n, A.},

booktitle = {IEEE Conference on Control Technology and Applications (CCTA)},

title = {Risk assessment and optimal allocation of security measures under stealthy false data injection attacks},

year = {2022},

tag = {10001},

doi = {10.1109/CCTA49430.2022.9966025},

}@inproceedings{Anand_ACC2022,

address = {Atlanta, Georgia, USA},

author = {Anand, S. C. and Teixeira, A. M. H.},

booktitle = {American Control Conference},

title = {Risk-averse controller design against data injection attacks on actuators for uncertain control systems},

year = {2022},

doi = {10.23919/ACC53348.2022.9867257},

tag = {10001},

}@incollection{Teixeira_Springer2021,

author = {Teixeira, Andr{\'e} M. H.},

editor = {Ferrari, Riccardo M. G. and Teixeira, Andr{\'e} M. H.},

title = {Security Metrics for Control Systems},

booktitle = {Safety, Security and Privacy for Cyber-Physical Systems},

year = {2021},

publisher = {Springer International Publishing},

address = {Cham},

pages = {1--8},

isbn = {978-3-030-65048-3},

doi = {10.1007/978-3-030-65048-3_6},

tag = {10001}

}

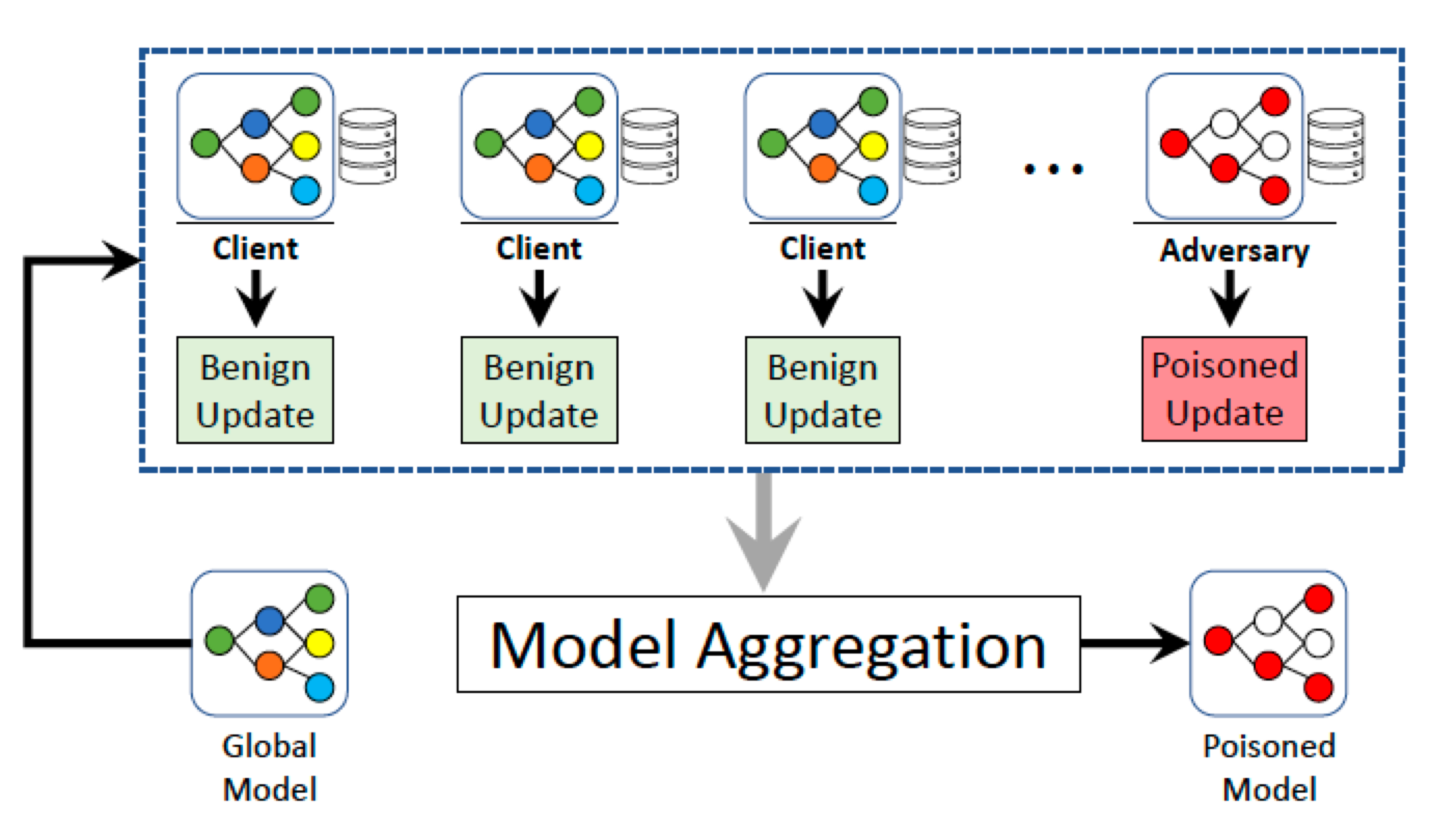

Federated machine learning (FedML) has proven to be a suitable approach for privacy-preserving machine learning across a large number of heterogeneous devices. Our group addresses security and privacy concerns in federated machine learning, namely model poisoning and information leakage attacks. The approach centers on developing new theories and methodologies with two main aims: (i) secure aggregation of local models under poisoning attacks and (ii) private distributed aggregation of local models.
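Why aggregation needs to be made secure can be illustrated with a textbook robust aggregation rule. The sketch below contrasts plain averaging with the coordinate-wise median, a standard Byzantine-robust aggregator chosen here purely for illustration; it is not the group's GAN-based defense or its learnable aggregation weights. Client updates are assumed to be flattened into the rows of a NumPy array, and the attacker values are made up.

```python
import numpy as np


def mean_aggregate(updates: np.ndarray) -> np.ndarray:
    """Plain federated averaging of client updates (rows = clients)."""
    return updates.mean(axis=0)


def coordinate_median_aggregate(updates: np.ndarray) -> np.ndarray:
    """Coordinate-wise median: each parameter is aggregated independently,
    so a minority of arbitrarily large poisoned values cannot drag it far."""
    return np.median(updates, axis=0)


rng = np.random.default_rng(0)
honest = rng.normal(loc=1.0, scale=0.1, size=(8, 3))  # 8 honest clients, 3 parameters
poisoned = np.full((2, 3), -100.0)                   # 2 hypothetical attacking clients
updates = np.vstack([honest, poisoned])

print(mean_aggregate(updates))               # pulled far below 1.0 by the attackers
print(coordinate_median_aggregate(updates))  # stays close to the honest value 1.0
```

The median tolerates a minority of attackers at the cost of some statistical efficiency when all clients are honest, which is one reason more refined aggregation schemes are an active research topic.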

Team members: Usama Zafar, Javad Parsa, Salman Toor, André M. H. Teixeira

@article{Zafar_TDSC2025,

title = {Robust Federated Learning Against Poisoning Attacks: A GAN-Based Defense Framework},

journal = {(In preparation)},

author = {Zafar, Usama and Teixeira, André M. H. and Toor, Salman},

year = {},

published = {0},

tag = {10003}

}@inproceedings{Parsa_ICLR2026,

author = {Parsa, J. and Daghestani, A. H. and Teixeira, A. M. H. and Johansson, M.},

title = {Byzantine-Robust Federated Learning with Learnable Aggregation Weights},

booktitle = {ICLR 2026 (Accepted)},

published = {1},

year = {2026},

tag = {10003},

}@article{Ju2024,

author = {Ju, L. and Zhang, T. and Toor, S. and Hellander, A.},

journal = {IEEE Transactions on Machine Learning in Communications and Networking},

title = {Accelerating Fair Federated Learning: Adaptive Federated Adam},

pages = {1017--1032},

volume = {2},

published = {1},

year = {2024},

doi = {10.1109/TMLCN.2024.3423648},

tag = {10003}

}@inproceedings{Ekmefjord_CCGrid2022,

address = {Taormina, Italy},

author = {Ekmefjord, M. and Ait-Mlouk, A. and Alawadi, S. and Åkesson, M. and Singh, P. and Spjuth, O. and Toor, S. and Hellander, A.},

booktitle = {Symposium on Cluster, Cloud and Internet Computing},

title = {Scalable federated machine learning with FEDn},

year = {2022},

doi = {10.1109/CCGrid54584.2022.00065},

tag = {10003},

}

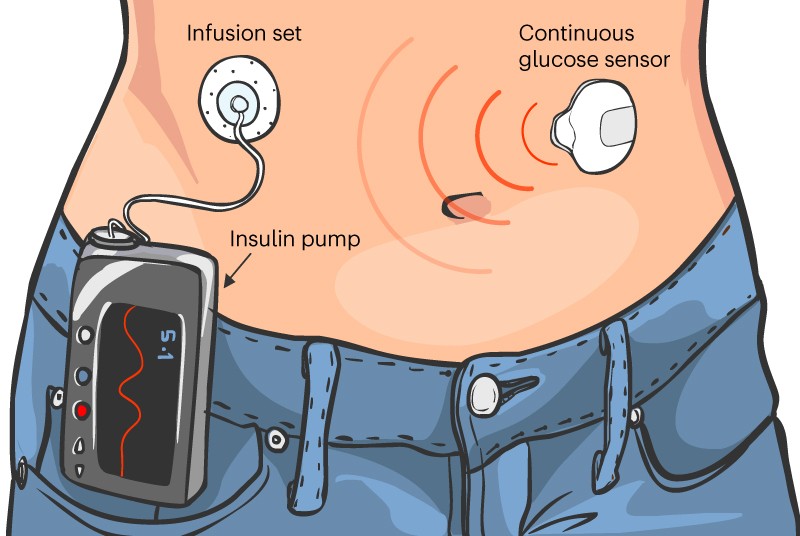

The artificial pancreas is an envisioned medical system whose function is to automatically regulate blood glucose levels in patients with diabetes, with little to no human intervention. At its core, an intelligent device autonomously decides how much synthetic insulin and glucagon to infuse into the body through infusion pumps, based on data received from sensors located throughout the body that measure, for instance, blood glucose levels in real time. Data exchange among the controlling device, the pumps, and the sensors is therefore critical: the whole system must operate safely, even in the presence of adversaries tampering with the communication or the devices.

In this line of research, we develop schemes that monitor sensor readings to detect anomalies and distinguish them from natural unknown disturbances, such as meal intake and physical exercise.
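Sequential change detection is one standard way to monitor sensor readings for a sudden bias. The sketch below is a textbook one-sided CUSUM test on glucose-sensor residuals, assuming Gaussian residuals with a known standard deviation and a known bias magnitude. It does not model meal disturbances, which is precisely what makes the real problem hard, and it is not the group's detector; all numbers are hypothetical.

```python
import numpy as np


def cusum_alarm(residuals, bias: float, sigma: float, threshold: float):
    """One-sided Gaussian CUSUM for a mean shift from 0 to `bias`.

    Returns the index of the first alarm, or None if no alarm is raised.
    """
    s = 0.0
    for k, r in enumerate(residuals):
        # Log-likelihood ratio of N(bias, sigma^2) versus N(0, sigma^2) for sample r
        llr = (bias / sigma**2) * (r - bias / 2.0)
        # Clip at zero so that only evidence in favor of a change accumulates
        s = max(0.0, s + llr)
        if s > threshold:
            return k
    return None


rng = np.random.default_rng(1)
sigma = 5.0                                  # hypothetical residual std (mg/dL)
clean = rng.normal(0.0, sigma, size=200)     # residuals before the attack
attacked = rng.normal(15.0, sigma, size=50)  # hypothetical +15 mg/dL sensor bias
residuals = np.concatenate([clean, attacked])

print(cusum_alarm(residuals, bias=15.0, sigma=sigma, threshold=10.0))
```

Raising `threshold` lowers the false-alarm rate at the price of a longer detection delay, which is the basic trade-off that quickest-detection designs optimize.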

Team members: Fatih Emre Tosun, André M. H. Teixeira

@article{Tosun_EJC2025,

author = {Tosun, F. E. and Teixeira, A. M. H. and Dong, J. and Ahlén, A. and Dey, S.},

journal = {European Journal of Control},

volume = {},

number = {},

pages = {101427},

title = {Kullback-Leibler Divergence-Based Filter Design Against Bias Injection Attacks},

year = {2025},

published = {1},

doi = {10.1016/j.ejcon.2025.101427},

tag = {10002}

}@article{Tosun_TIFS2025,

author = {Tosun, F. E. and Teixeira, A. M. H. and Dong, J. and Ahlén, A. and Dey, S.},

journal = {IEEE Trans. Information Forensics and Security},

volume = {20},

number = {},

pages = {2763--2777},

title = {Kullback-Leibler Divergence-Based Observer Design Against Sensor Bias Injection Attacks in Single-Output Systems},

year = {2025},

published = {1},

tag = {10002},

doi = {10.1109/TIFS.2025.3546167}

}@inproceedings{Tosun_SAFEPROCESS2024,

author = {Tosun, F. E. and Teixeira, A. M. H. and Ahlén, A. and Dey, S.},

booktitle = {12th IFAC Symposium on Fault Detection, Supervision and Safety for Technical Processes},

title = {Kullback-Leibler Divergence-Based Detector Design Against Bias Injection Attacks in an Artificial Pancreas System},

year = {2024},

published = {1},

doi = {10.1016/j.ifacol.2024.07.269},

tag = {10002}

}@article{Tosun_JPC2024,

author = {Tosun, F. E. and Teixeira, A. M. H. and Abdalmoaty, M. and Ahl\'{e}n, A. and Dey, S.},

journal = {Journal of Process Control},

volume = {153},

pages = {103162},

title = {Quickest Detection of Bias Injection Attacks on the Glucose Sensor in the Artificial Pancreas Under Meal Disturbances},

year = {2024},

doi = {10.1016/j.jprocont.2024.103162},

published = {1},

tag = {10002}

}@inproceedings{Tosun_CDC2023,

address = {},

author = {Tosun, F. E. and Teixeira, A. M. H.},

booktitle = {IEEE Conference on Decision and Control},

title = {Robust Sequential Detection of Non-stealthy Sensor Deception Attacks in an Artificial Pancreas System},

year = {2023},

published = {1},

tag = {10002}

}@inproceedings{Tosun_ACC2022,

address = {Atlanta, Georgia, USA},

author = {Tosun, F. E. and Teixeira, A. M. H. and Ahl\'{e}n, A. and Dey, S.},

booktitle = {American Control Conference},

title = {Detection of Bias Injection Attacks on the Glucose Sensor in the Artificial Pancreas Under Meal Disturbances},

year = {2022},

doi = {10.23919/ACC53348.2022.9867556},

tag = {10002},

}

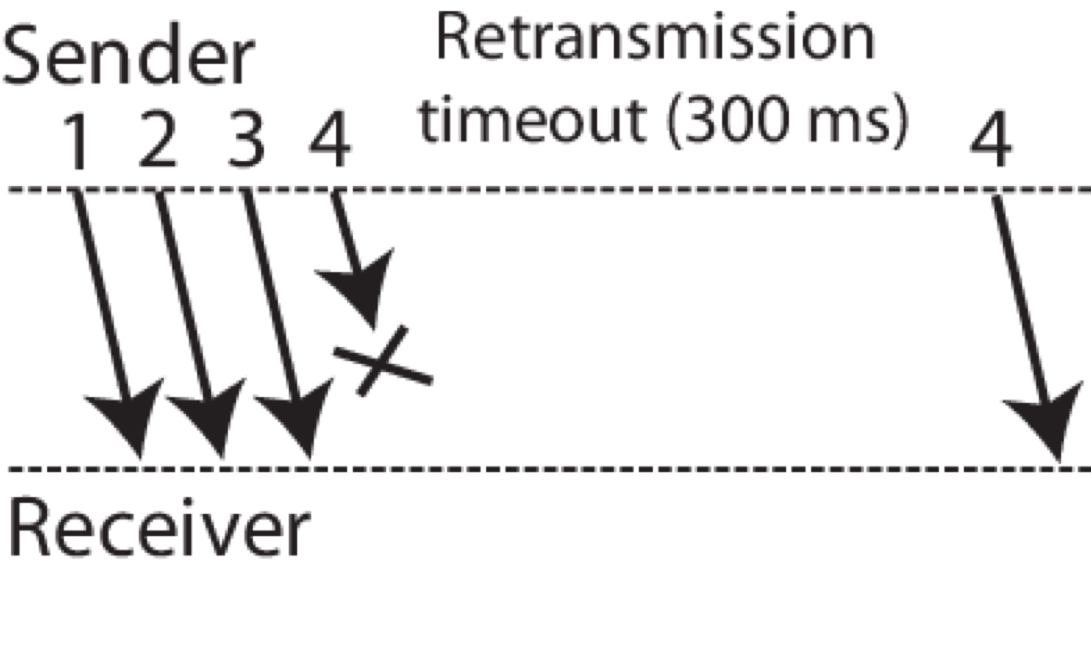

Feedback loop delay is known to impose limitations on the achievable performance of control systems. In particular, delays can increase oscillations, reduce regulation accuracy, and may cause destabilization of the control system. Large enough delays may also cause the loss of communication packets between the sensors, the controller, and the actuators, resulting in denial-of-service at the controller. Delays and packet losses are important aspects to be considered in the context of control over wireless communication networks. Unfortunately, delays can also be induced by malicious cyber-attacks that aim to disrupt the system. In the security context, it is important to understand how delays may be induced by adversaries and how the attacks may be disguised as natural properties of the communication channel. Our group investigates novel control-theoretic approaches for understanding, detecting, and mitigating attack-induced delays and packet losses, combining techniques from system identification, anomaly detection, and robust control.
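The destabilizing effect of delay can be seen in a minimal discrete-time sketch: a hypothetical scalar integrator plant `x[k+1] = a x[k] + b u[k]` with state feedback `u[k] = -K x[k-d]` that arrives `d` sampling periods late (for instance, because packets are held back). The closed loop is stable exactly when all roots of `z^(d+1) - a z^d + b K` lie strictly inside the unit circle. The plant, the gain, and the function name are illustrative assumptions.

```python
import numpy as np


def closed_loop_spectral_radius(a: float, b: float, K: float, d: int) -> float:
    """Spectral radius of x[k+1] = a x[k] - b K x[k-d] (feedback delayed by d steps).

    The characteristic polynomial is z^(d+1) - a z^d + b K.
    """
    coeffs = np.zeros(d + 2)
    coeffs[0] = 1.0      # coefficient of z^(d+1)
    coeffs[1] = -a       # coefficient of z^d
    coeffs[-1] += b * K  # constant term (added, so d = 0 gives z - a + b K)
    return float(np.max(np.abs(np.roots(coeffs))))


# Hypothetical integrator plant with a gain that is stabilizing without delay
for d in range(3):
    rho = closed_loop_spectral_radius(a=1.0, b=1.0, K=0.8, d=d)
    print(f"delay {d}: spectral radius {rho:.3f} ({'stable' if rho < 1 else 'unstable'})")
```

With these numbers, the loop is stable without delay (a single pole at 0.2), becomes oscillatory but remains stable with a one-step delay (complex poles of modulus √0.8 ≈ 0.894), and loses stability with a two-step delay.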

Team members: Torbjörn Wigren, Ruslan Seifullaev, André M. H. Teixeira

@inproceedings{Seifullaev_CDC024,

author = {Seifullaev, R. and Teixeira, A. M. H. and Ahl\'{e}n, A.},

booktitle = {IEEE Conference on Decision and Control},

title = {Event-triggered control of nonlinear systems under deception and Denial-of-Service attacks},

year = {2024},

doi = {10.1109/CDC56724.2024.10886155},

published = {1},

tag = {10004}

}@inproceedings{Wigren_ECC2024,

author = {Wigren, T. and Teixeira, A. M. H.},

booktitle = {European Control Conference},

title = {Delay Attack and Detection in Feedback Linearized Control Systems},

year = {2024},

published = {1},

tag = {10004}

}@inproceedings{Wigren_ECC2025,

author = {Wigren, T.},

booktitle = {European Control Conference},

title = {Convergence in delayed recursive identification of nonlinear systems},

year = {2024},

published = {1},

tag = {10004}

}@inproceedings{Wigren_CDC2023,

address = {},

author = {Wigren, T. and Teixeira, A. M. H.},

booktitle = {IEEE Conference on Decision and Control},

title = {Feedback Path Delay Attacks and Detection},

year = {2023},

published = {1},

tag = {10004}

}@inproceedings{WigrenIFAC2023,

address = {},

author = {Wigren, T. and Teixeira, A. M. H.},

booktitle = {IFAC World Congress},

title = {On-line Identification of Delay Attacks in Networked Servo Control},

year = {2023},

tag = {10004}

}

Sustained use of critical infrastructure, such as electrical power and water distribution networks, requires efficient management and control. Facilitated by the advancements in computational devices and non-proprietary communication technology, such as the Internet, the efficient operation of critical infrastructure relies on network decomposition into interconnected subsystems, thus forming networked control systems. However, the use of public and pervasive communication channels leaves these systems vulnerable to cyber attacks. This theme aims to create novel methodologies to enhance the security and resilience of networked dynamical systems under cyber attacks.

Team members: Anh Tung Nguyen, Alain Govaert, André M. H. Teixeira, Sérgio Pequito

@article{Arnstrom_SCL2025,

author = {Arnstr\"{o}m, D. and Teixeira, A. M. H.},

journal = {Systems \& Control Letters (Submitted)},

volume = {},

number = {},

pages = {},

title = {Efficiently Computing the Cyclic Output-to-Output Gain},

year = {},

published = {0},

tag = {10005}

}@inproceedings{Seifullaev_IFACWC2026,

author = {Seifullaev, R. and Teixeira, A. M. H.},

title = {Impact analysis of hidden faults in nonlinear control systems using output-to-output gain},

booktitle = {IFAC World Congress (Submitted)},

published = {0},

year = {},

tag = {10005}

}@inproceedings{Seifullaev_ECC2026,

author = {Seifullaev, R. and Teixeira, A. M. H.},

title = {An H2-norm approach to performance analysis of networked control systems under multiplicative routing transformations},

booktitle = {European Control Conference (Submitted)},

published = {0},

year = {},

tag = {10005}

}@article{Gallo_TAC2025,

author = {Gallo, A. and Nguyen, A. T. and Oliva, G. and Teixeira, A. M. H.},

journal = {IEEE Trans. Automatic Control (Submitted)},

number = {},

pages = {},

title = {On the Boundedness of the Solution to the Output-to-output l2-gain Strategic Stealthy Attacks Problem},

volume = {},

year = {},

published = {0},

tag = {10005}

}@inproceedings{Dong_ACC2026,

author = {Dong, J. and Zhang, K. and Nguyen, A. T. and Teixeira, A. M. H.},

title = {Fundamental limitations of sensitivity metrics for anomaly impact analysis in LTI systems},

booktitle = {American Control Conference (Accepted)},

published = {1},

year = {2026},

tag = {10005}

}@article{Nguyen_TAC2026,

author = {Nguyen, A. T. and Anand, S. C. and Teixeira, A. M. H.},

journal = {IEEE Trans. Automatic Control (Accepted)},

title = {Scalable and Optimal Security Allocation in Networks against Stealthy Injection Attacks},

published = {1},

year = {2026},

doi = {10.1109/TAC.2025.3639126},

tag = {10005},

taga = {10001}

}@inproceedings{Zhang_CDC2025,

author = {Zhang, Kangkang and Kasis, Andreas and Teixeira, A. M. H. and Jiang, Bin},

title = {Vulnerability Analysis against Stealthy Integrity Attacks for Nonlinear Systems},

booktitle = {IEEE Conf. on Decision and Control (CDC)},

published = {1},

year = {2025},

tag = {10005}

}@inproceedings{Nguyen_NecSys2025,

author = {Nguyen, A. T. and Anand, S. C. and Teixeira, A. M. H.},

title = {Security Metrics for Uncertain Interconnected Systems under Stealthy Data Injection Attacks},

booktitle = {10th IFAC Conference on Networked Systems (NecSys)},

published = {1},

year = {2025},

tag = {10005}

}@inproceedings{Arnstrom_L4DC2025,

author = {Arnstr\"{o}m, D. and Teixeira, A. M. H.},

title = {Data-Driven and Stealthy Deactivation of Safety Filters},

booktitle = {Annual Learning for Dynamics & Control Conference (L4DC)},

published = {1},

year = {2025},

tag = {10005}

}@inproceedings{Anand_ECC2025,

author = {Anand, S. C. and Chong, M. S. and Teixeira, A. M. H.},

title = {Data-Driven Identification of Attack-free Sensors in Networked Control Systems},

booktitle = {European Control Conference},

published = {1},

year = {2025},

tag = {10005}

}@article{Nguyen_TCNS2024,

author = {Nguyen, A. T. and Teixeira, A. M. H. and Medvedev, A.},

journal = {IEEE Trans. Control of Network Systems},

title = {Security Allocation in Networked Control Systems under Stealthy Attacks},

volume = {12},

number = {1},

pages = {216--227},

doi = {10.1109/TCNS.2024.3462546},

month = mar,

year = {2025},

published = {1},

tag = {10005}

}@inproceedings{Nguyen_CRITIS24,

author = {Nguyen, A. T. and Hertzberg, A. and Teixeira, A. M. H.},

title = {Centrality-based Security Allocation in Networked Control Systems},

year = {2025},

editor = {Oliva, Gabriele and Panzieri, Stefano and H{\"a}mmerli, Bernhard and Pascucci, Federica and Faramondi, Luca},

booktitle = {Critical Information Infrastructures Security},

publisher = {Springer Nature Switzerland},

address = {Cham},

pages = {212--230},

note = {Presented at the 19th International Conference on Critical Information Infrastructures Security},

doi = {10.1007/978-3-031-84260-3_13},

published = {1},

tag = {10005}

}@phdthesis{Nguyen_Lic2023,

author = {Nguyen, Anh Tung},

title = {Security Allocation in Networked Control Systems},

school = {Uppsala University},

year = {2023},

address = {Uppsala, Sweden},

month = oct,

type = {Licentiate thesis},

tag = {10005}

}@inproceedings{Ramos_CDC2023,

author = {Ramos, G. and Teixeira, A. M. H. and Pequito, S.},

booktitle = {IEEE Conference on Decision and Control},

title = {On the trade-offs between accuracy, privacy, and resilience in average consensus algorithms},

year = {2023},

published = {1},

tag = {10005},

taga = {10006}

}@inproceedings{Li_CDC2023,

address = {},

author = {Li, Z. and Nguyen, A. T. and Teixeira, A. M. H. and Mo, Y. and Johansson, K. H.},

booktitle = {IEEE Conference on Decision and Control},

title = {Secure State Estimation with Asynchronous Measurements against Malicious Measurement-data and Time-stamp Manipulation},

year = {2023},

published = {1},

tag = {10005}

}@inproceedings{NguyenIFAC2023,

address = {},

author = {Nguyen, A. T. and Anand, S. C. and Teixeira, A. M. H. and Medvedev, A.},

booktitle = {IFAC World Congress},

title = {Optimal Detector Placement in Networked Control Systems under Cyber-attacks with Applications to Power Networks},

tag = {10005},

year = {2023},

}@inproceedings{NguyenCDC2022,

address = {},

author = {Nguyen, A. T. and Anand, S. C. and Teixeira, A. M. H.},

booktitle = {IEEE Conference on Decision and Control (CDC)},

title = {A Zero-Sum Game Framework for Optimal Sensor Placement in Uncertain Networked Control Systems under Cyber-Attacks},

year = {2022},

doi = {10.1109/CDC51059.2022.9992468},

tag = {10005}

}@inproceedings{NguyenNecsys2022,

address = {},

author = {Nguyen, A. T. and Teixeira, A. M. H. and Medvedev, A.},

booktitle = {IFAC Conference on Networked Systems (NecSys)},

title = {A Single-Adversary-Single-Detector Zero-Sum Game in Networked Control Systems},

year = {2022},

doi = {10.1016/j.ifacol.2022.07.234},

tag = {10005},

}

Guaranteeing privacy in dynamical networks is particularly important given the pressing privacy concerns raised by distributed optimization, which is common in machine learning and artificial intelligence. The design of these networks has traditionally relied on implicit trust among agents, which raises significant privacy issues. We propose a novel approach that integrates control theory and optimization techniques to address these concerns. Our approach refines network architectures and communication protocols so that the privacy of individual agents is preserved while the efficacy of collective decision-making is maintained. These advances in network design aim to substantially improve the handling of privacy in dynamical networks, enabling their reliable and private use in a variety of settings.

Team members: André M. H. Teixeira, Sérgio Pequito

@article{Ramos_TAC2025,

author = {Ramos, G. and Silvestre, D. and Teixeira, A. M. H. and Pequito, S.},

journal = {IEEE Trans. Automatic Control (Submitted)},

number = {},

pages = {},

title = {Accurate Average Consensus with Joint Resilience and Privacy Guarantees},

volume = {},

year = {},

published = {0},

tag = {10006}

}@article{Ramos_TAC2024,

author = {Ramos, G. and Aguiar, A. P. and Kar, Soummya and Pequito, S.},

journal = {IEEE Trans. Automatic Control (Accepted)},

title = {Privacy preserving average consensus through network augmentation},

year = {2023},

published = {1},

tag = {10006}

}@article{Ramos_CSL2024,

author = {Ramos, G. and Pequito, S.},

journal = {Systems & Control Letters},

volume = {180},

pages = {105608},

year = {2023},

title = {Designing communication networks for discrete-time consensus for performance and privacy guarantees},

doi = {10.1016/j.sysconle.2023.105608},

published = {1},

tag = {10006}

}@inproceedings{Ramos_CDC2023,

author = {Ramos, G. and Teixeira, A. M. H. and Pequito, S.},

booktitle = {IEEE Conference on Decision and Control},

title = {On the trade-offs between accuracy, privacy, and resilience in average consensus algorithms},

year = {2023},

published = {1},

tag = {10005},

taga = {10006}

}@inproceedings{AbdalmoatyIFAC2023,

address = {},

author = {Abdalmoaty, M. and Anand, S. C. and Teixeira, A. M. H.},

booktitle = {IFAC World Congress},

title = {Privacy and Security in Network Controlled Systems via Dynamic Masking},

year = {2023},

video = {https://youtu.be/uuz5ppriWLk},

tag = {10006}

}